People love theorizing about AI. From podcasts to dinner parties, from social media to the folks at the table next to you, those two letters are on everyone’s lips.

I’m no exception. I often find myself engaged in conversations about what AI might or might not do, and sometimes I’m even one of those guys at the table next to you talking too loudly about it.

Will AI take all of our jobs? Will AI turn us into a pile of paperclips? Etc., etc.

The more I’ve participated in these conversations, and also worked with AI in the real world, the more I’ve realized that these conversations rest on a faulty foundation…

There is no one thing that is AI.

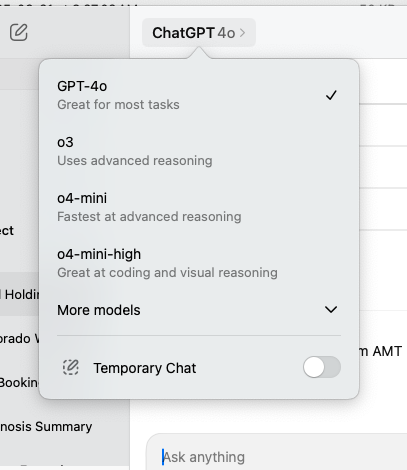

“AI” as we know it today is a marketing term. It’s a term we’ve collectively agreed upon to refer to a group of technologies: the transformer, large language models, GPUs, chatbots, etc. Just because we’ve all agreed to call these things “AI” doesn’t mean they automatically lead to Nick Bostrom’s paperclip maximizer or von Neumann/Kurzweil’s singularity.

In fact, the more I’ve used AI in both personal and professional settings, the more parallels I see to other technological platform shifts I’ve lived through: the internet, mobile, and the cloud. None of them turned my biological form into an office supply, Clippy notwithstanding.

I can’t help but notice a language game starting to play out that mirrors the one we played with machine learning vs. AI. Through the 2010s and early 2020s, we saw huge efficiency gains in implementing ML algorithms that could be trained on large amounts of data to make statistically accurate (i.e., useful) predictions.

There are tons of examples of this: lead scoring, social media feeds, product recommendations, ad targeting, and inventory forecasting, to name a few. Companies that built tools to commercialize these algorithms loved to call their products “AI platforms” (guilty as charged), but that label was generally viewed as marketing fluff, not “real AI.”

Even as Google started using the transformer to predict the next word in your search, no matter what gobbledegook you fat-fingered in, and made real progress on self-driving cars, no one thought of any of that as AI.

So a frequently cited paradox emerged, which went something like: “everything that’s possible today is just ML; everything that can’t yet be done is AI.”

Then came LLMs, which, combined with a chat interface, blew through the Turing test before we could even debate it, and we were all collectively like, “Fine, now you can call that AI.” Never mind that this AI was simply an extension of the same next-word (token) prediction Google was using in search, just with more data thrown at it and presented in a new form factor.

As humans do, we’ve moved the goalposts again, replacing “ML” with “AI” and “AI” with “ASI” or “AGI.” So now everything we’re doing today is “just AI,” and we’re not seeing all that paperclip/singularity stuff yet because that won’t come until we have “Artificial Super Intelligence,” a concept whose definition, again, is murky at best.

This is usually where people make the counterpoint that this time is different and that we’re on an exponential curve that hasn’t inflected yet. That might well be true, but I think it’s worth remembering that it’s been over five years since the launch of the GPT-3 API and over three years since the launch of ChatGPT! What is this, an exponential curve for ants?

Using OpenAI products as the starting point for what constitutes “AI” is also generous, since it ignores all the real “ML” applications I cited earlier. As a point of comparison from the mobile era, the iPhone App Store launched in July 2008 and Uber launched in July 2010, just two years later. I’m not saying we’re at the end of the AI S-curve, but I don’t think we can still claim we’re at the beginning either.

That said, I’ll concede that it does feel like things are changing faster this time, but if you zoom out, the pace starts to look a lot more like the other platform shifts I mentioned. We’ve seen awesome tools released, and people can now do things that would’ve been sci-fi 10 years ago, but we’re not seeing GDP suddenly grow 10%. We’re also seeing some job displacement due to AI, and will probably see more, but overall job growth is still trending positive.

We’ve seen generational companies get started and some incumbents get disrupted, and I’d anticipate more of that to come. However, just because we arbitrarily switched from using the letters ML to the letters AI does not suddenly turn a prediction into a prophecy.